Learn about Markov Decision Processes (MDPs)

Primer for Encyclopedic Knowledge

- Andrey Markov

- Markov property

- Markov Decision Process

- Markov Chains

- Statistical Model

- Control Theory; Control Strategies

- Cybernetics

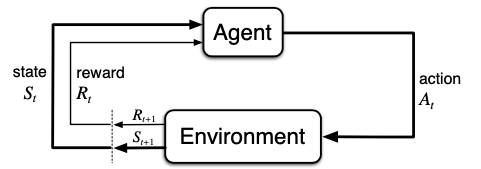

The figure above shows a formal representation of an MDP. Notice how simple yet profound it is. The agent is an abstract entity interacting with its environment, much like us humans! Our own reality is, of course, far more sophisticated, colorful, and nuanced.

You'll notice that the variables along the arrows carry a small subscript t, which indexes each moment, or step, in time. Crossing the dotted line advances time by one step (from t to t+1), much like taking a turn in a board game.
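The loop in the figure can be sketched in a few lines of Python. This is a minimal sketch assuming a made-up two-state MDP: the states `low`/`high`, the actions `wait`/`work`, and all probabilities and rewards are hypothetical. At each step t the agent observes S_t and picks A_t, and the environment samples R_{t+1} and S_{t+1}.

```python
import random

# A hypothetical two-state MDP, invented for illustration only.
# TRANSITIONS[state][action] -> list of (probability, next_state, reward)
TRANSITIONS = {
    "low":  {"wait": [(1.0, "low", 0.0)],
             "work": [(0.7, "high", 1.0), (0.3, "low", 0.0)]},
    "high": {"wait": [(0.9, "high", 0.5), (0.1, "low", 0.0)],
             "work": [(1.0, "high", 1.0)]},
}


def step(state, action, rng):
    """Environment: sample (next_state, reward) from the transition table."""
    outcomes = TRANSITIONS[state][action]
    probs = [p for p, _, _ in outcomes]
    _, next_state, reward = rng.choices(outcomes, weights=probs, k=1)[0]
    return next_state, reward


def run_episode(policy, start="low", steps=5, seed=0):
    """Agent-environment loop: at step t the agent sees S_t, picks A_t,
    and the environment returns R_{t+1} and S_{t+1}."""
    rng = random.Random(seed)
    state = start
    trajectory = []
    for t in range(steps):
        action = policy(state)                    # agent chooses A_t
        next_state, reward = step(state, action, rng)
        trajectory.append((t, state, action, reward, next_state))
        state = next_state                        # S_{t+1} becomes the new S_t
    return trajectory


if __name__ == "__main__":
    for t, s, a, r, s2 in run_episode(lambda s: "work"):
        print(f"t={t}: S_t={s}, A_t={a}, R_t+1={r}, S_t+1={s2}")
```

The policy here is just a function from state to action; everything that makes it an MDP lives in the transition table, which depends only on the current state and action (the Markov property).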

Q-Learning

Q-learning is a reinforcement learning algorithm that can learn optimal policies in stochastic environments without a model of those environments, which makes it a powerful tool for a wide range of applications, especially where modeling the environment is complex or impractical.

The setup is just like the multi-armed bandit scenario, except that each action now also changes the state. Actions have consequences!
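To make the difference concrete, here is one common incremental update for a bandit (a single state, so values depend only on the action a) next to the Q-learning update, where α is the learning rate, γ the discount factor, r the reward, and s' the state the action leads to. The only new ingredients are the state index and the discounted value of the next state:

$$Q(a) \leftarrow Q(a) + \alpha \left[ r - Q(a) \right]$$

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]$$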

Some background:

Q-learning is a model-free reinforcement learning algorithm that seeks to find the best action to take given the current state. Because it is model-free, it can compare the expected utility of the available actions without a model of the environment's transitions or rewards. Rather than learning a policy directly, Q-learning learns an action-value function, Q(s, a), which gives the expected utility of taking action a in state s and following the optimal policy thereafter. The policy then falls out of the Q-values: in each state, take the action with the highest Q-value.
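Reading a policy off the Q-values is a one-liner. This minimal sketch assumes a Q-table stored as a nested dictionary; the states `s0`/`s1`, the actions, and the numbers are hypothetical.

```python
# Hypothetical Q-table: Q[state][action] -> estimated action value.
Q = {
    "s0": {"left": 0.2, "right": 0.8},
    "s1": {"left": 0.5, "right": 0.1},
}


def greedy_policy(q_table, state):
    """Return the action with the highest Q-value in `state`."""
    actions = q_table[state]
    return max(actions, key=actions.get)


def state_value(q_table, state):
    """V(s) = max_a Q(s, a): the value of acting greedily from `state`."""
    return max(q_table[state].values())


print(greedy_policy(Q, "s0"))  # right
print(greedy_policy(Q, "s1"))  # left
```

Storing action values (rather than only state values) is what lets the agent choose actions without a model: it never needs to predict which state an action leads to.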

Here's how it operates:

- Initialization: The Q-values (action-values) are initialized to arbitrary starting values, and the algorithm continually updates them towards their true values.

- Exploration vs. Exploitation: The agent balances exploring the environment (trying actions to learn more about their outcomes) with exploiting its current knowledge (choosing the action with the highest current Q-value).

- Learning Q-Values: After taking an action, the agent observes the outcome and reward, and updates the Q-value of the previous state and action pair using the Bellman equation.

- Update Rule: The Q-value for a particular state-action pair is updated by taking a weighted average of the old value and the new information based on the reward received and the maximum Q-value for the next state.
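The exploration and update steps can be sketched as two small functions. This assumes epsilon-greedy as the exploration strategy (a common choice, not the only one), and the function names are hypothetical. `q_update` is written in the weighted-average form described above, which is algebraically the same as Q + α(target − Q).

```python
import random


def epsilon_greedy(q_values, epsilon, rng):
    """Explore with probability epsilon, otherwise exploit the best-known action.

    q_values: dict mapping action -> current Q(s, a) for the current state.
    """
    if rng.random() < epsilon:
        return rng.choice(list(q_values))    # explore: uniformly random action
    return max(q_values, key=q_values.get)   # exploit: greedy action


def q_update(old_q, reward, next_q_values, alpha, gamma):
    """Blend the old estimate with the new information (a weighted average).

    target = reward + gamma * max_a' Q(s', a')
    new Q  = (1 - alpha) * old Q + alpha * target
    """
    target = reward + gamma * max(next_q_values.values())
    return (1 - alpha) * old_q + alpha * target


rng = random.Random(0)
print(epsilon_greedy({"left": 0.0, "right": 2.0}, epsilon=0.0, rng=rng))  # right
print(q_update(0.0, reward=1.0, next_q_values={"left": 2.0, "right": 0.0},
               alpha=0.5, gamma=0.5))  # 1.0
```

A larger alpha trusts the newest sample more; alpha = 1 would discard the old estimate entirely.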

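By repeatedly exploring state-action pairs and updating their Q-values, Q-learning can find the optimal action-selection policy for any finite Markov Decision Process (MDP), given enough exploration (every state-action pair keeps being tried) and a suitably decaying learning rate.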


Example: Crawling Robot

Breaking down the Crawling Robot

In our crawling robot example, Q-learning helps the robot decide the most effective way to move forward. The robot is placed in various positions (states) and must choose actions that move it toward its goal. Through trial and error, the Q-learning algorithm lets the robot learn which movements (actions) yield the highest rewards.

Here's a simplified breakdown of the mechanics behind the robot's movement:

- States: The state is the current situation of the agent, our robot. In this example, it is a discretized representation of the robot's arm and hand angles: each angle is sorted into a bucket range, which turns the continuous range of angles into a finite set of states.

- Actions: The choice of action depends on which actions are available in a given state and on the current selection policy. The robot's actions are moving the arm or the hand up or down, represented as discrete choices.

- Rewards: The agent receives a reward after performing an action in a state, which leads to the next state. The reward guides, or nudges, the agent in the right direction through each transition, giving the cycle State > Action > Reward > State > Action. For our robot crawling with a simulated arm, the reward is computed from the change in the robot's position along the x-axis: forward movement earns a positive reward, and no movement or backward movement earns no reward (or a negative one).
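The state, action, and reward definitions above can be sketched as follows. This is a hypothetical illustration, not the original crawler code: the joint limits, bucket counts, action names, and helper functions are all assumptions. It uses the raw x-displacement as the reward, which is positive for forward motion, zero for none, and negative for backward motion.

```python
import math

# Hypothetical joint limits (radians) and bucket counts; the real crawler
# simulation may use different values.
ARM_MIN, ARM_MAX, ARM_BUCKETS = -math.pi / 4, math.pi / 4, 9
HAND_MIN, HAND_MAX, HAND_BUCKETS = -math.pi / 2, 0.0, 13


def to_bucket(angle, lo, hi, n_buckets):
    """Map a continuous angle in [lo, hi] to a bucket index 0..n_buckets-1."""
    fraction = (angle - lo) / (hi - lo)
    index = int(fraction * n_buckets)
    return min(max(index, 0), n_buckets - 1)  # clamp, e.g. when angle == hi


def discretize_state(arm_angle, hand_angle):
    """Discrete state = (arm bucket, hand bucket)."""
    return (to_bucket(arm_angle, ARM_MIN, ARM_MAX, ARM_BUCKETS),
            to_bucket(hand_angle, HAND_MIN, HAND_MAX, HAND_BUCKETS))


def legal_actions(state):
    """Available actions depend on the state: a joint at its limit can't move further."""
    arm, hand = state
    actions = []
    if arm < ARM_BUCKETS - 1:
        actions.append("arm-up")
    if arm > 0:
        actions.append("arm-down")
    if hand < HAND_BUCKETS - 1:
        actions.append("hand-up")
    if hand > 0:
        actions.append("hand-down")
    return actions


def reward(x_before, x_after):
    """Reward = displacement along the x-axis (positive when moving forward)."""
    return x_after - x_before
```

With 9 arm buckets and 13 hand buckets this gives 117 discrete states, small enough for a plain Q-table.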

Learning Q-values with Q-learning

The Q-learning agent is not included in the provided code, but it would typically function as follows:

- Initialization: A Q-learning agent begins by initializing a table of Q-values for each state-action pair, typically starting at zero.

- Exploration/Exploitation: The agent must decide whether to explore new actions randomly or exploit its current knowledge to select the best action based on existing Q-values.

- Action Execution: Upon selecting an action, the agent performs it using the `doAction` method, which in turn provides the new state and resulting reward.

- Q-value Update: The agent updates the Q-value for the prior state-action pair with the new data using the Bellman equation:

  $$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]$$

  where $s$ is the previous state, $a$ is the action taken, $r$ is the reward, $s'$ is the new state, $\alpha$ is the learning rate, and $\gamma$ is the discount factor.

- Iteration: This process is repeated over many iterations, allowing the agent to gradually improve its Q-values and, by extension, its policy.
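The steps above can be put together into a small, runnable sketch. The original crawler code and its `doAction` method are not reproduced here; instead this assumes a hypothetical one-dimensional `ToyCrawler` whose `do_action` method plays the same role (it returns the new state and the reward, where the reward is the change in position). `QLearningAgent`, `train`, and all parameter values are illustrative, not taken from the original code.

```python
import random
from collections import defaultdict


class ToyCrawler:
    """Hypothetical 1-D stand-in for the crawler (not the original simulation).

    States are positions 0..goal. "forward" moves +1, "back" moves -1
    (clamped at 0). The reward is the change in position, mirroring the
    x-displacement reward. Reaching `goal` ends the episode.
    """

    def __init__(self, goal=4):
        self.goal = goal
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position

    def is_terminal(self, state):
        return state == self.goal

    def do_action(self, action):
        """Hypothetical analogue of doAction: returns (next_state, reward)."""
        step = 1 if action == "forward" else -1
        new_position = max(self.position + step, 0)
        reward = float(new_position - self.position)
        self.position = new_position
        return new_position, reward


class QLearningAgent:
    def __init__(self, actions, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
        self.q = defaultdict(float)  # Q[(state, action)], initialized to 0
        self.actions = actions
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = random.Random(seed)

    def best_action(self, state):
        # Ties go to the first action in self.actions.
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def choose_action(self, state):
        # Epsilon-greedy: explore with probability epsilon, else exploit.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return self.best_action(state)

    def update(self, state, action, reward, next_state, done):
        # Q(s,a) <- Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a));
        # a terminal next state contributes no future value.
        future = 0.0 if done else max(self.q[(next_state, a)] for a in self.actions)
        td_error = reward + self.gamma * future - self.q[(state, action)]
        self.q[(state, action)] += self.alpha * td_error


def train(episodes=200, max_steps=1000, seed=0):
    env = ToyCrawler()
    agent = QLearningAgent(actions=["back", "forward"], seed=seed)
    for _ in range(episodes):
        state = env.reset()
        for _ in range(max_steps):
            action = agent.choose_action(state)
            next_state, reward = env.do_action(action)
            done = env.is_terminal(next_state)
            agent.update(state, action, reward, next_state, done)
            state = next_state
            if done:
                break
    return agent


if __name__ == "__main__":
    trained = train()
    print([trained.best_action(s) for s in range(4)])
```

Because Q-learning is off-policy, the update always uses the best next action (`max`) even on steps where the agent explored; keeping epsilon above zero ensures every state-action pair keeps being tried.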

Tuning the parameters (learning rate, discount factor, and exploration rate), adjusting the amount of randomness, and speeding up the simulation timescale...